U of T team working to address biases in artificial intelligence systems

Posted Jul 26, 2021 11:48 am.

Last Updated Jul 27, 2021 12:30 pm.

A University of Toronto team has launched a free service to address biases in artificial intelligence (AI) systems, a technology that is increasingly used around the world and that can have life-changing impacts on individuals.

“Almost every AI system we tested has some sort of significant bias,” says Parham Aarabi, a professor at the University of Toronto. “For the past few years, one of the realizations has been that these AI systems are not always fair and they have different levels of bias. The challenge has been that, it’s been hard to know how much bias there is and what kind of bias there might be.”

Earlier this year Aarabi, who has spent the last 20 years working on different kinds of AI systems, and his colleagues started HALT, a University of Toronto project launched to measure bias in AI systems, especially when it comes to recognizing diversity.

AI systems are used in many places, including airports, governments, health agencies, police forces, cell phones, social media apps and, in some cases, companies' hiring processes. Sometimes it's as simple as walking down the street and having your face recognized.

However, it's humans who design AI systems and choose the data behind them, and that's where researchers say biases can be introduced.

“More and more, our interactions with the world are through artificial intelligence,” Aarabi says. “AI is around us and it involves us. We believe that if AI is unfair and has biases, it doesn’t lead to good places so we want to avoid that.”

The HALT team works with universities, companies, governments, and agencies that use AI systems. A full evaluation can take the team up to two weeks. It measures the amount of bias present in a technology, and the team can pinpoint exactly which demographics are being left out or affected.

“We can quantitatively measure how much bias there is, and from that, we can actually estimate what training data gaps there are,” says Aarabi. “The hope is, they can take that and improve their system, get more training data, and make it more fair.”
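
One simple way to put a number on this kind of bias is to compare how often a system is right for each demographic group. The sketch below is illustrative only and is not HALT's method: the function names, group labels and records are all made up for the example.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return the fraction of correct predictions for each group.

    `records` is an iterable of (group, predicted, actual) tuples.
    This layout is an assumption made for the example.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation results, not real measurements.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

per_group = accuracy_by_group(records)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

A large gap suggests the system serves some groups worse than others, which may point to groups that are under-represented in the training data.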

To help its clients and partners, the team also provides a report with guidelines on how the evaluated AI system can be improved and made fairer.

Each case is unique, but Aarabi and his team have so far worked on 20 different AI systems and found that the number one issue has been the lack of training data for certain demographics.

“If what you teach the AI is bias, for example, you don’t have enough training data of all diverse inputs and individuals, then that AI does become bias,” he says. “Other things like the model type and being aware of what to look at and how to design AI systems, also can make an impact.”

The HALT team has evaluated technologies that work with facial recognition, images, and even voice-based data.

“We found that even dictation systems in our phones can be quite biased when it comes to dialect,” Aarabi says. “Native English speakers, it works reasonably well on. But if people have a certain kind of accent or different accents, then the accuracy level can drop substantially and usability of these phones becomes less.”
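
A drop in dictation accuracy like the one Aarabi describes is commonly expressed as a word error rate (WER): the number of word-level edits needed to turn the system's transcript into the correct one, divided by the length of the correct one. The sketch below computes an average WER per accent group. The article does not say which metric HALT uses, so treat this as an assumption. The sample sentences and group names are invented.

```python
from collections import defaultdict


def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # Levenshtein distance over words, keeping one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def mean_wer_by_group(samples):
    """Average WER per group from (group, reference, hypothesis) tuples."""
    scores = defaultdict(list)
    for group, reference, hypothesis in samples:
        scores[group].append(word_error_rate(reference, hypothesis))
    return {group: sum(vals) / len(vals) for group, vals in scores.items()}


# Hypothetical transcripts, not real test data.
samples = [
    ("accent_a", "turn on the lights", "turn on the lights"),
    ("accent_a", "call my mother now", "call my mother now"),
    ("accent_b", "turn on the lights", "turn of the light"),
    ("accent_b", "call my mother now", "call my brother not"),
]

wer_by_group = mean_wer_by_group(samples)
print(wer_by_group)
```

A higher average for one group means the dictation system makes more mistakes for those speakers.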

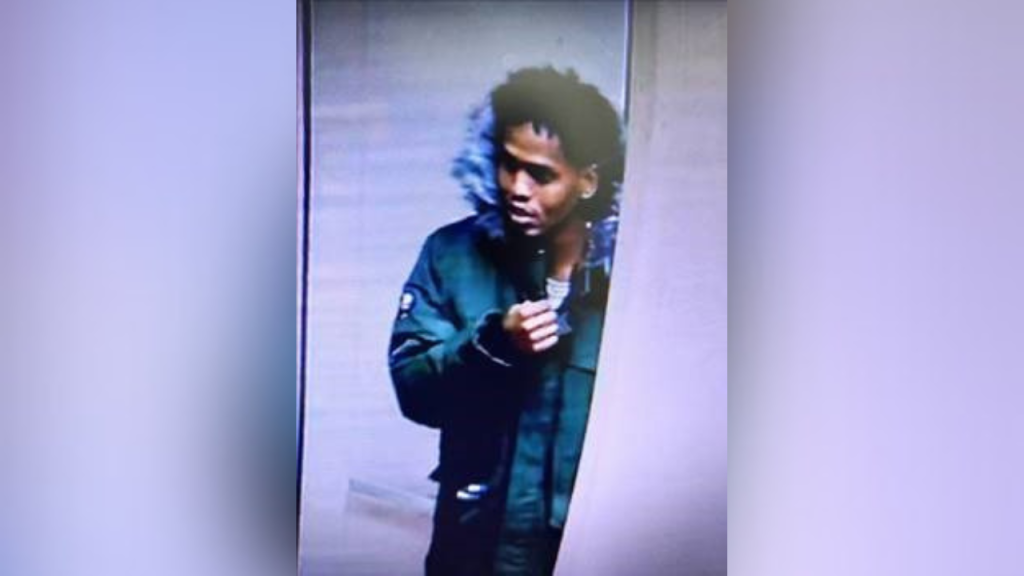

Facial recognition has faced increased scrutiny over the years, as experts warn of its potential to perpetuate racial inequality. In parts of the world, the technology has been used by the criminal justice system and immigration enforcement, and there have been reports that it has led to the wrongful identification and arrest of Black men in the U.S.

The American Civil Liberties Union has called for an end to the use of face surveillance technologies, saying facial technology “is racist, from how it was built to how it is used.”

Privacy and Ethics around AI systems

With the continued use of these technologies, there have been questions about, and calls for, the regulation of AI systems.

“It’s very important that when we use AI systems or when governments use AI systems, that there be rules in place that they need to make sure that they’re fair and validated to be fair,” Aarabi says. “I think slowly governments are waking up to that reality, but I do think we need to get there.”

Ann Cavoukian, a former three-term Privacy Commissioner of Ontario, says most people are unaware of the consequences of AI and of its potential, both positive and negative, including the biases that exist.

“We found that the biases have occurred against people of colour, people of Indigenous backgrounds,” she says. “The consequences have to be made clear, and we have to look under the hood. We have to examine it carefully.”

Earlier this year, an investigation found that the use of Clearview AI’s facial-recognition technology in Canada violated federal and provincial laws governing personal information.

In response to the investigation, it was announced that the U.S. firm would stop offering its facial-recognition services in Canada and would suspend its contract with the RCMP.

“They slurp people’s images off of social media and use it without any consent or notice to the data subjects involved,” says Cavoukian, who is now the Executive Director of the Global Privacy and Security by Design Centre. “3.3 billion facial images stolen, in my view, slurped from various social media sites.”

Cavoukian adds that until recently, law enforcement agencies, most recently the RCMP, were using the technology unbeknownst to police chiefs. She says it’s important to raise awareness about which AI systems are used and what their limitations are, particularly in their interactions with the public.

“Government has to ensure that whatever it relies on for information that it acts on, is in fact accurate and that’s largely missing with AI,” Cavoukian says. “The AI has to work equally for all of us, and it doesn’t. It’s bias, so how can we tolerate that.”

Calls to address bias in AI aren’t only happening in Canada.

Late last month, the World Health Organization issued its first global report on artificial intelligence in health, saying the growing use of the technology comes with opportunities and challenges.

The technology has been used to diagnose and screen for diseases and to support public health interventions, including disease management and response.

However, the report, which draws on a panel of experts appointed by WHO, points out the risks of AI, including biases encoded in algorithms and the unethical collection and use of health data.

The researchers say AI systems trained on data collected from people in high-income countries may not perform as well for people in low- and middle-income settings.

“Like all new technology, artificial intelligence holds enormous potential for improving the health of millions of people around the world, but like all technology, it can also be misused and cause harm,” said Dr. Tedros Adhanom Ghebreyesus, WHO’s Director-General, in a statement.

“This important new report provides a valuable guide for countries on how to maximize the benefits of AI, while minimizing its risks and avoiding its pitfalls.”

The health agency adds that AI systems should be carefully designed and trained to reflect the diversity of socio-economic and healthcare settings, and that governments, providers and designers should work together to address ethical and human rights concerns at every stage of an AI system’s design and development.